Vector Databases: AI’s Core for Modern Apps

The traditional relational database, with its structured tables and rows, has been the backbone of software for decades. But as AI models became more sophisticated, particularly with the rise of deep learning, a new data type came to the fore: embeddings. With it emerged a new kind of database built to store and search them, the vector database.

What are Embeddings, and Why Are They Important?

At its core, an embedding is a numerical representation of an object (like text, images, audio, or even entire concepts) in a high-dimensional space. Think of it like a sophisticated coordinate system where similar objects are located closer to each other, and dissimilar objects are further apart.

Here’s why they’re critical for modern AI:

- Semantic Understanding: Embeddings capture the meaning of data points and the relationships between them, something traditional databases struggle with. For example, to a traditional database, “car” and “automobile” are just two different strings. Their embeddings, by contrast, would lie very close together in vector space, reflecting their semantic similarity.

- Machine Learning Input: Most modern machine learning models (especially deep learning) operate on numerical data. Embeddings provide this numerical representation, allowing models to process and understand complex, unstructured data.

- Efficiency in Search & Retrieval: Instead of keyword matching, AI applications can now perform “similarity search” based on the underlying meaning. This is where vector databases shine.
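To make the idea of "closeness" in vector space concrete, here is a minimal sketch that compares vectors with cosine similarity, one common similarity measure. The three-dimensional vectors are hand-written toy values chosen for illustration, not the output of any real embedding model, and the name `toy_embeddings` is hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Assumption: toy, hand-picked vectors. Real embeddings come from a trained
# model and typically have hundreds or thousands of dimensions.
toy_embeddings = {
    "car":        [0.90, 0.80, 0.10],
    "automobile": [0.85, 0.75, 0.15],
    "banana":     [0.10, 0.20, 0.95],
}

car_vs_automobile = cosine_similarity(toy_embeddings["car"], toy_embeddings["automobile"])
car_vs_banana = cosine_similarity(toy_embeddings["car"], toy_embeddings["banana"])

print(f"car vs automobile: {car_vs_automobile:.3f}")
print(f"car vs banana:     {car_vs_banana:.3f}")
```

With these toy values, the "car" and "automobile" vectors score close to 1, while "car" and "banana" score much lower. A keyword match would treat all three strings as equally unrelated.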

The Challenge: Storing and Querying Embeddings

Imagine you have millions, even billions, of these high-dimensional vectors. How do you store them efficiently, and more importantly, how do you quickly find the “most similar” vectors to a given query vector?

Traditional databases are not optimized for this. Their indexing mechanisms (like B-trees) are designed for exact matches or range queries on individual, orderable values. High-dimensional vectors have no sort order that preserves similarity, so these indexes cannot efficiently answer the “nearest neighbor” or “similarity” queries required for embeddings.
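Without a suitable index, the fallback is a full scan that compares the query against every stored vector. The sketch below shows this exact, brute-force search on random data. The dataset size, dimensionality, and function names are assumptions chosen for illustration.

```python
import heapq
import math
import random


def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def exact_nearest(query, vectors, k=3):
    """Return the indices of the k stored vectors closest to the query.

    Every stored vector is compared against the query, so the cost grows
    linearly with both the number of vectors and their dimensionality.
    """
    return heapq.nsmallest(
        k, range(len(vectors)), key=lambda i: euclidean(query, vectors[i])
    )


# Assumption: random stand-in data; a real workload would hold model embeddings.
rng = random.Random(0)
DIM = 64
stored = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(2000)]

# A slightly perturbed copy of item 42 should have item 42 as its nearest neighbor.
query = [v + 0.01 for v in stored[42]]
top = exact_nearest(query, stored, k=3)
print(top)
```

This is fine for a few thousand vectors. At millions or billions, scanning everything for each query becomes the bottleneck.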

This is where vector databases come into play.

What is a Vector Database?

A vector database is a specialized database designed from the ground up to store, index, and query high-dimensional vectors. Its core functionality revolves around Approximate Nearest Neighbor (ANN) search.

Unlike exact nearest neighbor search (which is computationally expensive in high dimensions), ANN algorithms trade a small amount of accuracy for large gains in speed and scalability: they may occasionally miss a true nearest neighbor, but they avoid comparing the query against every stored vector. They achieve this by using techniques like:

- Locality Sensitive Hashing (LSH): Hashing similar items to the same “buckets.”

- Tree-based indexes (e.g., KD-Trees, Annoy): Partitioning the vector space to narrow down search areas.

- Graph-based indexes (e.g., HNSW – Hierarchical Navigable Small World): Building a graph structure where nodes are vectors and edges connect similar vectors, allowing for efficient traversal.

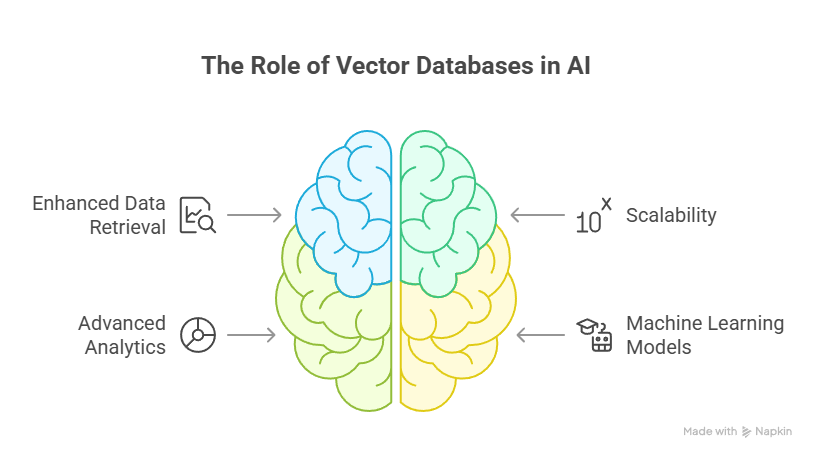
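As one illustration of the hashing idea from the list above, here is a minimal sketch of locality sensitive hashing with random hyperplanes, a common LSH scheme for angular similarity. It uses a single hash table and random data, and all names and sizes are assumptions for illustration. It is not how any particular vector database implements its index.

```python
import random
from collections import defaultdict

rng = random.Random(0)
DIM, NUM_PLANES = 32, 8  # assumption: small sizes for readability

# Each random hyperplane through the origin contributes one bit of the hash.
planes = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_PLANES)]


def signature(vec):
    """One boolean per hyperplane: which side of the plane the vector falls on."""
    return tuple(
        sum(p * v for p, v in zip(plane, vec)) >= 0 for plane in planes
    )


# Assumption: random stand-in data instead of real embeddings.
vectors = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(500)]

# Index step: group vector ids by signature ("bucket").
buckets = defaultdict(list)
for idx, vec in enumerate(vectors):
    buckets[signature(vec)].append(idx)


def candidates(query):
    """Ids sharing the query's bucket; only these need an exact comparison."""
    return buckets.get(signature(query), [])


print(len(candidates(vectors[7])), "candidates instead of", len(vectors))
```

Vectors pointing in similar directions tend to fall on the same side of most hyperplanes, so they tend to share a bucket. A near neighbor can still land in a different bucket, which is the "approximate" part. Practical schemes usually reduce such misses by querying several independent hash tables.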

Why Vector Databases Matter for Modern AI Applications

The implications of efficient vector storage and retrieval are profound across various AI domains:

- Semantic Search & Recommendation Systems

- Generative AI & Large Language Models (LLMs)

- Image and Video Recognition

- Anomaly Detection

- Drug Discovery & Bioinformatics

The Database/AI Convergence

The rise of vector databases signifies a powerful convergence between the database and AI fields. No longer are databases merely storage repositories; they are becoming active participants in the AI pipeline, enabling smarter and more efficient AI applications.

As AI models continue to grow in complexity and scale, the ability to manage and query vast amounts of high-dimensional vector data will only become more critical. Vector databases are not just a trend; they are a fundamental component of modern AI infrastructure, unlocking new possibilities and pushing the boundaries of what AI can achieve.